When JSTOR Senior User Researcher Jennifer Saville reflects on the development of JSTOR Seeklight, the new AI-assisted collection processing tool at the heart of JSTOR Digital Stewardship Services, she doesn’t start with the technology. Instead, she starts with a recurring challenge that library professionals face every day:

“What’s on the drive?”

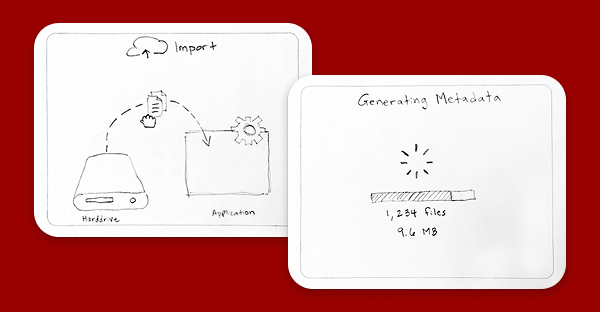

“A professor retires, a university president steps down, or an archive acquires a new collection,” Saville explains. “Along with boxes of papers, there’s often a hard drive, or several. No one’s quite sure what’s on it. The challenge isn’t just preserving the files. It’s understanding what might be valuable for research, describing them, and making them discoverable.”

This dilemma, echoed in conversations with institutions worldwide, was part of a much larger problem: the backlog of unprocessed material is overwhelming and keeps growing.

“Archivists and librarians aren’t just managing historical collections,” Saville notes. “They’re facing a constant influx of new materials—born-digital records, digitized collections, donations—without enough time or staff to process them all.”

Previously, JSTOR had focused on the discovery and reach stage of digital archival materials. But without initial processing, many materials never reached that stage.

“We realized there were frustrations we didn’t fully understand,” Saville recalls. “So instead of guessing, we went out and asked.”

This led to a fundamental shift in approach. Rather than creating just another tool, JSTOR sought to build a solution that was practical, effective, and shaped by the very people who would use it.

Listening first: A user-centered approach

JSTOR has been working with digital collections since 1995, when the first journal was digitized. More recently, its Open Community Collections program opened the JSTOR platform to institutions looking to share their unique primary source collections. But as JSTOR deepened these partnerships, it became clear that early-stage processing (the meticulous, labor-intensive work of organizing and describing collections) was a major bottleneck that contributed to an ever-growing backlog of materials.

In early 2024, JSTOR conducted more than 60 interviews with library leaders at institutions worldwide to better understand the barriers they faced and how JSTOR could help.

“We had some ideas going in,” Saville says. “We brought concepts as a starting point for discussion. But through deep listening and probing into the ‘why’ behind the pain points, we realized the problem was bigger, and more nuanced, than we had initially thought.”

A key finding stood out:

“On average, less than 5% of collections are digitized. And even if they are, they’re often not described. If they’re not described, they’re not findable. If they’re not findable, they’re not usable.”

Digitization alone wasn’t enough. Without descriptive metadata, collections remained hidden.

And beyond metadata creation, there was another issue: workflow fragmentation.

“There are so many tools out there,” Saville explains. “No one tool does it all. Navigating the array of tools and platforms across a library, from homegrown systems to specialized software that does one thing really well, can be a barrier to a smooth collections processing workflow.”

This complexity can lead to inconsistent metadata, siloed workflows, and extra labor just to make collections usable. That insight reshaped the path forward.

“We realized there was an opportunity for a technical intervention in metadata creation—one that will fit into a larger platform, but is flexible enough to streamline description workflows and could enhance existing metadata.”

That meant scrapping early ideas and building something new. Something truly shaped by community needs.

From concept to collaboration: Building with the community

To begin, JSTOR formed a small, dedicated team.

“It was just three of us,” Saville recalls. “We weren’t some giant tech team. We were sketching ideas on whiteboards, trying to figure out what would actually help.”

Early explorations built on existing efforts to use AI to improve content discovery within JSTOR. That work had shown how AI could enhance human expertise rather than replace it, and reinforce critical thought rather than automate it. Putting the human at the center of the intelligence became a guiding principle in the product development process.

This helped the team focus on a single core question:

Can AI assist with descriptive metadata in a way that enhances, rather than replaces, the work of archivists?

Early testing of prototypes confirmed the potential.

“From the beginning, there was immediate recognition of the value of accurate, succinct descriptions. It was seen as a huge time saver.”

But feedback surfaced challenges, too:

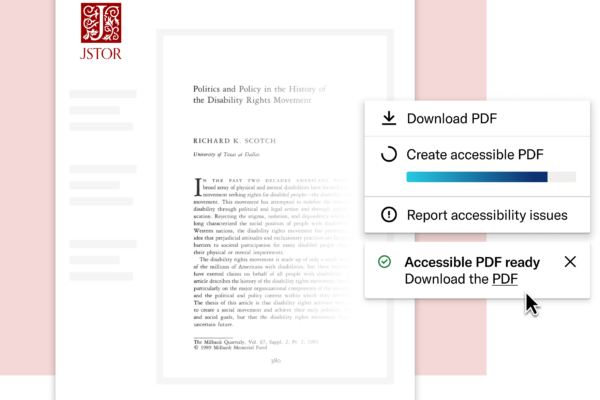

“One concern we heard was that the AI-generated metadata looked so polished that it could be easy to accept it without review,” Saville explains. “We needed built-in mechanisms to ensure human oversight—things like confidence scores, review labels, and clear workflows for evaluation.”

Meanwhile, JSTOR’s own metadata librarians conducted side-by-side qualitative reviews, analyzing AI-generated metadata field by field.

“We integrate internal expertise with insights from external experts to ensure a balanced approach. Our earliest experts review and refine before it even reaches users, which is crucial.”

This combination of internal and external expertise enabled JSTOR to develop JSTOR Seeklight, a system that is fast, smart, and specifically designed to support, rather than replace, professional expertise.

Saville sees collaboration with archivists, librarians, and library leaders as essential to ensuring AI serves as a meaningful tool in collections processing.

“We’re starting to see that more and more library leaders, archivists, and special collections folks are leaning in to say ‘we have to make a difference here. We can’t be okay with this backlog. How can we innovate in this space?’ And we want to be there to help build solutions accordingly.”

Beta testing: Refining JSTOR Seeklight through real-world use

By late 2024, JSTOR was ready to move beyond one-off demos and into a structured beta program focused on JSTOR Seeklight.

“A one-hour demo is an effective overview, but we needed more in-depth feedback. The beta program allowed us to work closely with institutions—testing features in real environments, learning how they fit into actual workflows, and refining JSTOR Seeklight based on real-world evidence.”

This phase included:

- Site visits to institutions like the University of Chicago and Vanderbilt, where JSTOR’s team observed archivists at work, facilitated workshops to map out workflows, shared experiments in progress, and discussed pain points.

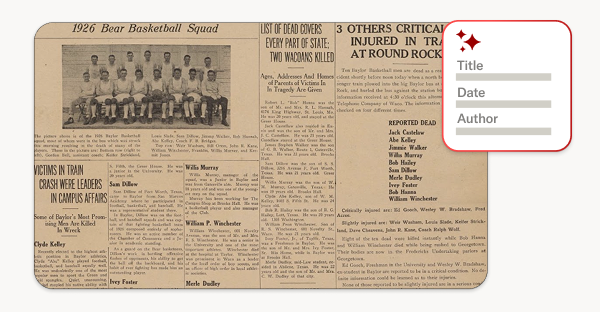

- Live testing with participants using their own materials, which helped refine JSTOR Seeklight’s ability to process a wide range of archival materials.

- Weekly feedback loops, ensuring that discoveries from beta testers directly informed improvements.

One major discovery was that JSTOR Seeklight was surprisingly good at reading handwriting.

“We expected JSTOR Seeklight to struggle with handwritten documents, but in many cases, it outperformed our expectations.”

JSTOR Seeklight also excelled in two other areas: generating aggregate summaries, which can help archivists quickly build collection guides, and processing non-English languages with impressive accuracy, an immediate benefit for many institutions.

Beta participants reported significant time savings, too:

“JSTOR Seeklight reduces time to create item-level descriptive metadata by up to 95%—including time to review and edit—with an average time savings of around 86%, transforming workflows that once took as long as 45 minutes into a matter of minutes.”

In the world of special collections and archives, every minute saved is a minute regained for the higher-order work that archivists and librarians are trained for but often have to sideline in favor of clerical tasks.

From beta to charter: A long-term commitment

As testing progressed, JSTOR’s team realized something crucial: metadata generation alone wasn’t enough. JSTOR Seeklight needed to integrate into broader workflows for managing, preserving, and sharing collections.

This led to the development of an integrated system within JSTOR Digital Stewardship Services, so that metadata creation with JSTOR Seeklight became part of a comprehensive approach to collection management.

“The question isn’t just ‘How do we generate metadata?’ It’s ‘How does JSTOR Seeklight assist experts who will review, edit, and enable access?’”

This shift shaped the JSTOR Digital Stewardship Services charter program, a structured initiative launching in April 2025 and designed to continue refining JSTOR Seeklight in close collaboration with the community.

“The charter isn’t just about giving institutions access to a finished product,” Saville explains. “It’s about learning together. This isn’t a static technology, it’s an evolving system, built with, and for, the community.”

Why this story matters

Every stage of the journey, from concept through development and beta to the charter program, has been shaped by deep collaboration, listening, and co-creation.

“We didn’t sit in a room and invent something in isolation,” Saville reflects. “This tool exists because librarians, archivists, and metadata experts told us what they needed, and then helped us build it.”

Saville emphasizes that this work is far from over.

“We’ve come so far in such a short time, and we have enthusiastic support for our long-term vision. The launch isn’t the end. It’s the beginning of the next phase in development.”

Libraries and archives continue to face mounting challenges in processing digital assets. JSTOR Seeklight was developed with the people who understand the realities of that work, and it will continue to evolve alongside their needs.

Interested in shaping the future of AI-assisted stewardship with JSTOR Seeklight? Learn more about JSTOR Digital Stewardship Services.