Defining a new, community-driven tool for transforming the discovery of distinctive collections

When archivists and librarians responsible for special collections talk about collections processing, they’re generally referring to the continuum of work that transforms materials into accessible, discoverable collections. This includes a broad set of activities that are nearly impossible to fully address given resource constraints, and are optimized for discovery and use in a primarily tangible environment where finding aids serve as core entry points.

As a result, many institutions operate with substantial processing backlogs that delay discovery and access—and center collection-level practices that do not address today’s granular, item-level discovery needs. This leaves significant portions of distinctive collections underused, and their potential value unrealized. Accelerating processing while also enabling item-level discovery is a daunting challenge.

Recent technological advances have, however, opened new possibilities for processing collections at scale in ways that can dramatically expand discovery and improve accessibility for all users. This opens up the opportunity to enable stewardship that reflects and serves scholarly and societal needs, overcoming institutional constraints and often-held professional assumptions about proper practice. Realizing that potential requires stewarding institutions and their professional practitioners to seek and adapt new tools and approaches—and the organizations supporting their work to collaborate with them in developing those tools. My colleagues and I are germinating the first in a new category of digital services that is just coming online—what we call a collections processing tool.

The proud history of processing

The spectrum of activities to which collections processing refers can vary across material types and collections. It includes appraisal, arrangement, and accession; rehousing and conservation; creating finding aids or other cataloging; metadata generation and scanning; screening for sensitive content; and so forth.

While these steps are familiar, they’re not static. Rather, they’re continually shaped by the conditions under which they’re conducted: the professional values that archivists bring to their work, the shifting institutional priorities that frame that work, the technological environments in which collections are used, and the realities of resources and capacity.

Over time, these pressures have contributed to the processing backlogs that are so prevalent today. Often, these backlogs are partially obscured by the presence of stub or minimally processed records—useful for inventory control, but insufficient to enable real discovery, context, or use. But even among collections that are nominally discoverable, only a small fraction have been digitized. For many libraries and archives, large-scale digitization today feels as daunting (or unnecessary) as it did when it initially appeared to our community decades ago in the context of journals, and then books. While demand for digital access continues to grow, shared and sustainable approaches to digitization at scale remain underimagined, leaving much potential for expanded discovery and use unrealized.

One widely adopted response to these constraints is the “More Product, Less Process” (MPLP) approach, first articulated in 2005. As my colleague, the archivist and curator Emilie Hardman, describes it in Bridging Capacity and Care: A Field Report on Archives and Special Collections, MPLP “emphasizes pragmatic, baseline description over the cultivation of granular detail,” and “represented a pivotal shift in archival processing, marking a move away from exhaustive item-level description toward more efficient methodologies.” The ideas of minimal processing continue to inform digital practice, as illustrated by Delivering Archives and Digital Objects: A Conceptual Model (DadoCM), a minimal, system-agnostic framework for describing digital objects according to archival principles.

Finding aids have remained central in this context, providing essential entry-points to archival collections. Over time, the related work has evolved—from creating paper finding aids, to digitally transforming and reformatting them, to marking up with EAD (Encoded Archival Description) for online discovery. While each stage has improved digital discoverability, the work itself remains time-consuming, labor-intensive, and ultimately incomplete: even in digital form, finding aids offer only a limited set of structured pathways into collections. Rules and standards such as DACS (Describing Archives: A Content Standard) and influential frameworks like MPLP have always adapted to meet the discovery needs of their time. And discovery is itself changing. Researchers today expect granular searchability, immediate digital access, high-quality full text for accessibility, and intelligent discovery—qualities not supported by minimal processing techniques. These are not simply modern expectations, however; they are emerging as prerequisites for equitable participation in contemporary scholarship, especially as travel budgets constrict, and the demand for open, public-facing resources grows. As those needs evolve, so too must the frameworks that guide how collections are processed, described, and made usable.

A digitization-first approach

Some parts of processing—such as format stabilization, condition assessment, and other forms of physical care—will always remain hands-on. No technology will ever replace that. But the steps closest to discovery—identifying what something is, what it contains, how it relates to other materials, and how that information is conveyed to users—present real opportunities for new approaches.

Processing workflows—including the very order of operations—were created under a set of technological realities and constraints. If those realities no longer hold, and corresponding workflows no longer optimally serve discovery and impact, they should evolve. This includes re-examining the long-standing practice of describing materials before digitizing them, based on the premise that metadata is necessary in order to create digital collections. Indeed, some in the field have already begun rethinking this sequence through scan-to-describe and backlog-reduction pilots, rapid capture programs, and transcript-led description for audiovisual materials. These experiments demonstrate the potential of digitize-and-describe workflows to make discovery faster and more equitable.

The approach outlined here builds on these emerging community practices: what if digitization, paired with intelligent recognition, became the starting point? Once materials are digitized (or available born-digital), that digital foundation can support subsequent description, access, and, crucially, ongoing reprocessing and refinement as technologies, standards, and institutional priorities evolve.

Processing for discovery and impact

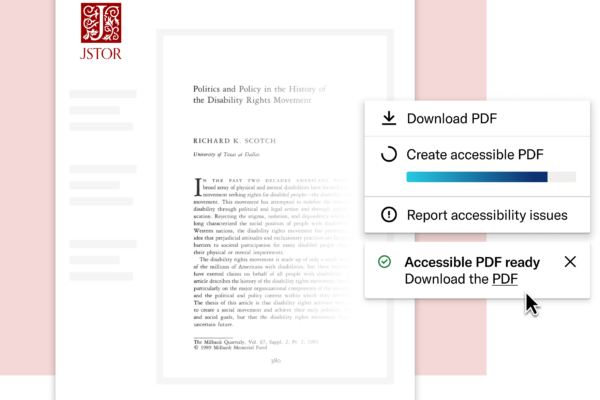

This approach draws on a key lesson from JSTOR’s experience making journals and books digitally accessible: metadata matters for certain valuable entry-points, but full text drives substantial discovery and usage. Today, metadata and full text should work together to drive discovery that is both precise and highly relevant. In the longer term, as discovery systems draw meaning directly from content and its connections, models may move beyond metadata, enabling richer, more intelligent pathways into research—a possibility that underscores both the near- and long-term value of full text.

The opportunity of a digitization-first process is to optimize discovery and access today, while laying a foundation for future innovation in stewardship and scholarship. In such a process, a group of archival records, for example, is scanned, and the resulting files are loaded into a collections processing tool that:

- Generates accurate full text or a transcript, which is enormously impactful for item-level discovery

- Creates item-level metadata, which enables discovery based on description

- Drafts a collection-level summary, providing intelligence that can be valuable for assessment, and the starting point for a finding aid

These are a few of the tasks such a tool can help with today. Over time, one can envision it becoming far more capable within the full set of a practitioner’s processing activities.

All this is supervised by an archivist or librarian who manages the tool and reviews and edits its outputs as necessary. That practitioner is able to process collections faster than using traditional methods, while the collections are more discoverable and far more impactful than they otherwise would be. This is what a digitization-first process, leaning on a collections processing tool, enables.
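As a rough illustration of the workflow described above, the sketch below strings the three drafting tasks together and keeps every output marked as unreviewed until a practitioner approves it. It is a minimal sketch under stated assumptions, not a description of how any particular tool is built: the names `Item`, `process_collection`, and `approve` are hypothetical, and the recognition, description, and summary steps are passed in as placeholder functions rather than real services.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Item:
    """One scanned item; a hypothetical structure for illustration only."""
    filename: str
    full_text: str = ""
    metadata: Dict[str, str] = field(default_factory=dict)
    reviewed: bool = False  # stays False until a practitioner signs off


def process_collection(
    filenames: List[str],
    recognize_text: Callable[[str], str],
    describe_item: Callable[[str], Dict[str, str]],
    summarize: Callable[[List[str]], str],
) -> Tuple[List[Item], str]:
    """Draft full text, item-level metadata, and a collection-level summary."""
    items = []
    for name in filenames:
        text = recognize_text(name)  # full text or transcript
        items.append(Item(filename=name, full_text=text, metadata=describe_item(text)))
    summary = summarize([item.full_text for item in items])
    return items, summary


def approve(item: Item, corrections: Optional[Dict[str, str]] = None) -> Item:
    """Record a practitioner's review, applying any edits to the draft metadata."""
    item.metadata.update(corrections or {})
    item.reviewed = True
    return item


# Placeholder steps standing in for real recognition and description services.
def stub_recognize(filename: str) -> str:
    return f"text of {filename}"


def stub_describe(text: str) -> Dict[str, str]:
    return {"title": text[:20]}


def stub_summarize(texts: List[str]) -> str:
    return f"{len(texts)} items"


items, summary = process_collection(
    ["letter_001.tif", "letter_002.tif"], stub_recognize, stub_describe, stub_summarize
)
approve(items[0], {"title": "Letter to the editor"})
```

The design choice worth noting is that review is an explicit step: drafts are produced in bulk, but nothing is treated as final until a practitioner has approved or corrected it.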

Not just processing but maintaining

In resource-constrained environments where MPLP is the norm, it can be daunting to even consider the maintenance work necessary for ensuring discoverability and access over time.

Collections may need to be updated to reflect new accessibility standards, re-described through a reparative lens, or surfaced through full text that was previously unavailable. Each of these priorities requires recognizing collections processing not as a one-time activity, but as an ongoing element of stewardship. In many ways, this iterative model fulfills a vision long present but rarely realized in archival practice—one anticipated in frameworks like MPLP, which emphasized the importance of returning to and refining description over time. New tools can deliver on that promise, extending care for collections and their use in ways once aspirational, but now increasingly achievable.

Collections processing tools can support this ongoing work as part of a holistic stewardship strategy, enabling practitioners to maintain, enhance, and refresh digital collections as an integral part of their practice.

A new role for professionals

In adopting and managing collections processing tools, professional practitioners have the opportunity to improve the outcomes to which collection stewards are committed. In many ways, this evolution parallels how developments in cataloging infrastructure transformed general library practice—from card catalogs to OPACs and integrated discovery systems—introducing new tools that supported greater discoverability and reach at scale, while keeping professional judgment at the center.

Special collections, archives, and museum holdings have an array of systems and infrastructure to support their work, but they have long lacked comparable infrastructure to support description and discovery. Collections processing tools have the potential to close that gap, providing the same kind of infrastructure, scalability, and visibility for distinctive materials that general library systems bring to published scholarship.

While these tools can accelerate routine tasks, their greater promise lies in improving the quality and reach of discovery and in advancing accessibility. Addressing backlogs, expanding the capacity to collect, and enabling reprocessing all contribute to the foundational mission of libraries and archives: preserving and providing access to knowledge.

Using collections processing tools to accelerate and expand the impact of their collections, practitioners are empowered to engage the workflow differently. These tools augment core archival work by handling repetitive tasks and surfacing opportunities for human expertise: recognizing and transcribing text, flagging sensitive or duplicative content, and generating descriptive scaffolding that requires curatorial judgment and refinement. New technologies don’t replace professional expertise; they depend on it, ensuring that archivists remain central to the interpretive and ethical decisions that shape discovery.

A new community-driven category

Ultimately, a collections processing tool has several key characteristics, but it is more than just a set of features. It:

- Anchors itself in the goals of discovery and impact, not just description, to increase the visibility and accessibility of distinctive collections

- Produces recognized text and descriptive scaffolding at scale as part of a growing array of tasks essential to processing

- Strengthens and extends the interpretive and decision-making roles of professionals

- Enables new workflows, including digitization-first approaches, that reflect the potential for extending impact

My colleagues and I are developing a collections processing tool—JSTOR Seeklight—in close partnership with a community of dozens of charter institutions and hundreds of individual librarians and archivists. Together, we are identifying and addressing new ways to serve the needs of collections stewards and users, and sharing what we learn with the community through public presentations, community engagement, and reflections on this blog.

This work is deeply aligned with our organizational mission to expand access to knowledge and education. Through JSTOR Seeklight, we aim to extend that mission to the realm of archives and special collections—bringing them into digital reach not just more quickly, but more meaningfully and completely. We believe there is an opportunity to transform the discovery and impact of special collections and archives that is no less significant than JSTOR’s work 30 years ago to reanimate the dormant potential of journal backfiles through digitization. To do so, we are joining up JSTOR Seeklight’s promise as a collections processing tool with the reach of the JSTOR platform for discovery and access and the trustworthiness of Portico’s infrastructure for long-term preservation within the broader JSTOR Stewardship ecosystem.

We are proud and humbled to be working with our community to co-create JSTOR Seeklight and define this new category together. Please follow our progress here on this blog and let us know how our investments can contribute to your work. If you are interested in joining us and learning together, you can learn more about our charter program and how to get involved. And, as ever, if you would like to share your experience or speak directly, I would love to hear from you.